CLINICAL VARIATION INSIGHT: PALLIATIVE CARE CONSULTS

- Natasha Hagemeyer, PhD

- 05/19/2020

Part II is a deeper discussion of the methodology that we use to help hospitalists ensure that patients who are most in need of palliative care get it.

Context

Agathos is a transparency platform that delivers action-level insights on practice variation directly to physicians to support informed decision-making about patient care. Palliative care consults are especially well suited to Agathos feedback: the evidence supporting them is accumulating, adoption lags behind that evidence, and there is a degree of discretion, or “grey area,” about exactly when to use them.

We sought to tell physicians how often they consulted palliative care teams in situations where such care might be warranted. By letting physicians see how their use of palliative care consults compares with that of their peers, we aimed to empower them to make their own decisions and self-regulate their practice patterns.

Methodology

Palliative care is appropriate only for patients who are severely ill or in need of end-of-life care. Physicians may have highly variable volumes of patients for whom such care is appropriate, leading to different proportions of patients receiving palliative care even if the physicians are using the same heuristics for ordering consults. To present physicians with fair assessments of their palliative care utilization, we chose to restrict the denominator of the benchmark to only those patients who were likely to be palliative-appropriate.
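The effect of restricting the denominator can be shown with a small worked example. The sketch below is a hypothetical illustration, not Agathos code: two invented physicians follow the same heuristic (a consult for 60% of palliative-appropriate patients and none for anyone else) but see different numbers of palliative-appropriate patients. The `Encounter` type, the helper names, and the panel sizes are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Encounter:
    palliative_appropriate: bool  # hypothetical flag produced by the case-mix step
    consult_ordered: bool


def consult_rate(encounters, restrict_to_appropriate):
    """Share of encounters with a palliative care consult, optionally restricted."""
    if restrict_to_appropriate:
        pool = [e for e in encounters if e.palliative_appropriate]
    else:
        pool = list(encounters)
    if not pool:
        return None
    return sum(e.consult_ordered for e in pool) / len(pool)


def panel(n_total, n_appropriate, n_consulted):
    """Hypothetical panel in which consults go only to palliative-appropriate patients."""
    appropriate = [Encounter(True, i < n_consulted) for i in range(n_appropriate)]
    others = [Encounter(False, False) for _ in range(n_total - n_appropriate)]
    return appropriate + others


# Both physicians consult on 60% of palliative-appropriate patients (same heuristic),
# but physician B sees four times as many such patients.
dr_a = panel(n_total=100, n_appropriate=10, n_consulted=6)
dr_b = panel(n_total=100, n_appropriate=40, n_consulted=24)

for name, encounters in [("A", dr_a), ("B", dr_b)]:
    print(name, consult_rate(encounters, False), consult_rate(encounters, True))
```

Over all encounters, physician B appears to consult four times as often as physician A (0.24 versus 0.06). Restricted to palliative-appropriate patients, both rates are 0.6, which reflects the heuristic the two physicians share.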

To accomplish this key step, we used machine learning to determine whether patients were palliative-appropriate. Machine learning is a powerful approach for classifying complex data: a model “learns” patterns from previously and accurately labeled data, then uses those patterns to classify new data. Using a random forest classifier trained on prior clinical decisions about which patients received palliative care, we predicted whether current patients who did not receive palliative care should have received it.

A random forest builds many decision trees, each on a random subset of the data, and averages their results. Because no single tree dominates the prediction, this approach limits the influence of outliers and reduces the risk that quirks in the data lead to false conclusions. Each decision tree classifies the data by splitting it at a series of binary decision points. For example, a tree might split patients on whether a given comorbidity is present or absent, separating them into two buckets; subsequent decision points refine each bucket further. This was the first Agathos insight to use machine learning to control for case-mix, making it a particularly exciting technical advance.
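To make the mechanics concrete, here is a deliberately tiny, pure-Python sketch of the random forest idea; it is not the model Agathos used. Each tree is cut down to a single present-versus-absent split (a “stump”), is trained on a bootstrap sample of patients using a random subset of features, and the forest averages the trees’ outputs. The three binary features and the rule that generates the synthetic labels are hypothetical. A production model would more likely use a library implementation such as scikit-learn’s `RandomForestClassifier`, with deeper trees and real clinical features.

```python
import random


def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 1.0 - p * p - (1.0 - p) * (1.0 - p)


def fit_stump(rows, labels, feature_ids):
    """One-split tree: pick the present/absent feature with the lowest weighted Gini impurity."""
    overall = sum(labels) / len(labels)
    best = None
    for f in feature_ids:
        present = [y for x, y in zip(rows, labels) if x[f]]
        absent = [y for x, y in zip(rows, labels) if not x[f]]
        impurity = len(present) * gini(present) + len(absent) * gini(absent)
        if best is None or impurity < best[0]:
            best = (impurity, f, present, absent)
    _, f, present, absent = best

    def leaf(ys):
        # Each leaf stores the share of positive (palliative-appropriate) patients in it.
        return sum(ys) / len(ys) if ys else overall

    return f, leaf(present), leaf(absent)


def fit_forest(rows, labels, n_trees=25, max_features=2, seed=0):
    rng = random.Random(seed)
    n, n_features = len(rows), len(rows[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]           # bootstrap sample of patients
        feats = rng.sample(range(n_features), max_features)  # random subset of features
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feats))
    return forest


def predict_proba(forest, row):
    """Average the leaf probabilities across all trees."""
    return sum(p_present if row[f] else p_absent for f, p_present, p_absent in forest) / len(forest)


# Synthetic patients: (metastatic_cancer, heart_failure, abnormal_vitals), each 0/1.
rows = [(m, h, v) for m in (0, 1) for h in (0, 1) for v in (0, 1)] * 5
labels = [int(m or (h and v)) for m, h, v in rows]

forest = fit_forest(rows, labels)
print(predict_proba(forest, (1, 0, 0)), predict_proba(forest, (0, 0, 0)))
```

Every tree that splits on `metastatic_cancer` sends a metastatic patient to an all-positive leaf, while trees that split on the other two features treat the two example patients identically, so the averaged probability for `(1, 0, 0)` comes out above that for `(0, 0, 0)`.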

To build the training data for the machine learning model, we relied on prior clinical decisions. If, on presentation or within the first ten days of their stay, a patient received a palliative care consult, was discharged to hospice, or died, we considered them palliative-appropriate upon entering the hospital. Patients who met these criteria only later in their stay, after day ten, were likely to have developed a need for palliative care during their hospitalization. After review with our medical advisors, we restricted the criteria to events within the first ten days and excluded patients who became palliative-appropriate later in their stay. This cutoff was deemed appropriate because most patients who did not need palliative care on presentation would not deteriorate to the point of needing it within ten days.
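The training-label rule above can be written as a small function. This is a sketch under stated assumptions: days are numbered from 1 on the day of presentation, “within the first ten days” is read as day 10 or earlier, and the `Stay` record and its field names are hypothetical. Returning `None` marks a stay as excluded from training.

```python
from dataclasses import dataclass
from typing import Optional

WINDOW_DAYS = 10  # assumption: day 1 is the day of presentation, so days 1-10 count


@dataclass
class Stay:
    # Hypothetical record: day of stay on which each event happened, or None if it never did.
    palliative_consult_day: Optional[int] = None
    hospice_discharge_day: Optional[int] = None
    death_day: Optional[int] = None


def training_label(stay: Stay) -> Optional[bool]:
    """True = palliative-appropriate on entry, False = not, None = excluded from training."""
    event_days = [
        d
        for d in (stay.palliative_consult_day, stay.hospice_discharge_day, stay.death_day)
        if d is not None
    ]
    if not event_days:
        return False  # no qualifying event during the stay
    if min(event_days) <= WINDOW_DAYS:
        return True  # qualifying event on presentation or within the window
    return None  # the need arose later in the stay: excluded


print(training_label(Stay(palliative_consult_day=3)))  # True
print(training_label(Stay(death_day=15)))              # None
print(training_label(Stay()))                          # False
```

Treating late events as exclusions rather than negatives keeps patients whose need for palliative care developed during the hospitalization from being taught to the model as “not palliative-appropriate.”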

To determine whether hospitalists missed palliative-appropriate patients on presentation or within the first ten days of the stay, we used the random forest classifier to find patients who fit the same profile as those already determined to be palliative-appropriate. The classifier used information available to the provider while the patient was under their care: comorbidities, vital signs, and selected lab results. To assess comorbidities, we used the Elixhauser Comorbidity Index, a widely accepted method of categorizing patients’ comorbidities from ICD-10 (International Classification of Diseases, 10th Revision) diagnosis codes [1]. The Index can be used to predict hospital resource use and in-hospital mortality, with higher accuracy than earlier comorbidity indexes.
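One way to turn a patient’s record into classifier inputs is sketched below. The comorbidity mapping is a hypothetical, heavily truncated stand-in for the Elixhauser definitions: it lists only a few ICD-10 code prefixes for three of the Index’s categories. The vital-sign and lab names are likewise invented for illustration, and the sketch assumes they arrive as already-summarized numbers from the period when the patient was under the provider’s care.

```python
# Illustrative subset only: the real Elixhauser ICD-10 mappings cover many more
# codes and categories than these three.
COMORBIDITY_PREFIXES = {
    "congestive_heart_failure": ("I50",),
    "chronic_pulmonary_disease": ("J44",),
    "metastatic_cancer": ("C77", "C78", "C79"),
}


def comorbidity_flags(icd10_codes):
    """Map a list of ICD-10 codes to present/absent flags per comorbidity category."""
    codes = [c.replace(".", "").upper() for c in icd10_codes]
    return {
        name: any(code.startswith(prefix) for code in codes for prefix in prefixes)
        for name, prefixes in COMORBIDITY_PREFIXES.items()
    }


def feature_vector(icd10_codes, vitals, labs):
    """Flags first, then vitals and labs, each in sorted-key order so columns line up across patients."""
    flags = comorbidity_flags(icd10_codes)
    features = [int(flags[name]) for name in sorted(flags)]
    features += [vitals[key] for key in sorted(vitals)]
    features += [labs[key] for key in sorted(labs)]
    return features


print(feature_vector(
    ["C78.7", "I10"],
    vitals={"heart_rate": 110, "systolic_bp": 92},
    labs={"albumin_g_dl": 2.4},
))
# [0, 0, 1, 110, 92, 2.4]
```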

For each patient, the machine learning model output a probability that the patient was palliative-appropriate, which we converted into a binary classification: palliative-appropriate or palliative-inappropriate. We chose a strict cutoff at which the model’s true positive rate was 65%; that is, the model correctly identified 65% of the patients who received palliative care. A strict cutoff limits false positives, meaning patients classified as palliative-appropriate who were not. A patient was considered palliative-appropriate if they met the ten-day criteria described above or if the model classified them as palliative-appropriate. We then calculated each provider’s rate of ordering palliative care consults across this palliative-appropriate population.
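Choosing a cutoff for a target true positive rate is a short calculation over labeled scores. In the sketch below, `threshold_for_tpr` returns the highest cutoff at which at least 65% of known palliative-appropriate patients score at or above it, and `palliative_appropriate` combines that cutoff with the ten-day criteria. The function names and the toy scores are hypothetical, and choosing the cutoff on held-out labeled data rather than on the training set is an assumption here.

```python
import math


def threshold_for_tpr(scores, labels, target_tpr=0.65):
    """Highest score cutoff whose true positive rate on labeled data is at least target_tpr."""
    positives = sorted((s for s, y in zip(scores, labels) if y), reverse=True)
    if not positives:
        raise ValueError("need at least one positive example")
    # Number of positives that must score at or above the cutoff; the small epsilon
    # guards against floating-point rounding nudging an exact product above an integer.
    k = max(1, math.ceil(target_tpr * len(positives) - 1e-9))
    return positives[k - 1]


def true_positive_rate(scores, labels, cutoff):
    positives = [s for s, y in zip(scores, labels) if y]
    return sum(s >= cutoff for s in positives) / len(positives)


def palliative_appropriate(meets_ten_day_criteria, score, cutoff):
    """Either route counts: the observed ten-day criteria or a model score at or above the cutoff."""
    return meets_ten_day_criteria or score >= cutoff


scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 1, 0, 1, 1, 0, 1, 0, 0]
cutoff = threshold_for_tpr(scores, labels)
print(cutoff, true_positive_rate(scores, labels, cutoff))
```

With these toy scores the cutoff lands at 0.6, where four of the six labeled positives are caught (a true positive rate of about 67%); moving the cutoff up to the next positive score, 0.8, would drop the rate to 50%. A higher cutoff admits fewer false positives at the cost of a lower true positive rate, which is the trade-off a strict cutoff makes.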

For each of the first ten days of the patient’s stay for which no palliative care consult had been ordered on a previous day, we identified the patient’s primary attending physician from attending records and progress notes. Where progress notes and attending records were inconsistent, the progress notes determined the primary attending physician.

If a provider was the primary attending physician on the day that a palliative care consult was ordered, they were documented as ordering a palliative care consult for that encounter. Conversely, if a provider was a primary attending physician on one or more days for a palliative-appropriate patient, but there was not a palliative care consult on any day that they were the primary attending physician, they were documented as not ordering a palliative care consult for that encounter. In effect, where there were multiple primary attending physicians in the first ten days, there was many-to-one attribution of physicians to palliative-appropriate patients.
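The attribution rules above can be sketched as follows. The data shapes are assumptions: each encounter is a mapping from day of stay (day 1 = presentation) to a pair of physician IDs, one from the attending record and one from the progress note, and the IDs themselves are hypothetical. The helpers credit each physician on a palliative-appropriate encounter with ordering or not ordering a consult, then aggregate those outcomes into per-physician rates.

```python
from collections import defaultdict

WINDOW_DAYS = 10  # assumption: days 1-10 of the stay are eligible


def primary_attending(record_attending, note_attending):
    """Progress notes take precedence when they disagree with attending records."""
    return note_attending if note_attending is not None else record_attending


def attribute_encounter(daily_sources, consult_day=None):
    """Return {physician: ordered_consult} for one palliative-appropriate encounter.

    daily_sources maps day of stay -> (attending_record, progress_note_attending).
    consult_day is the day the palliative care consult was ordered, or None.
    """
    outcome = {}
    for day in sorted(daily_sources):
        if day > WINDOW_DAYS or (consult_day is not None and day > consult_day):
            break  # outside the window, or a consult was already ordered on an earlier day
        doctor = primary_attending(*daily_sources[day])
        outcome[doctor] = outcome.get(doctor, False) or day == consult_day
    return outcome


def consult_rates(outcomes):
    """Per-physician share of attributed encounters in which they ordered the consult."""
    ordered, total = defaultdict(int), defaultdict(int)
    for outcome in outcomes:
        for doctor, did_order in outcome.items():
            total[doctor] += 1
            ordered[doctor] += did_order
    return {doctor: ordered[doctor] / total[doctor] for doctor in total}


encounters = [
    # On day 3 the progress note names B (overriding the record), and B orders the consult.
    attribute_encounter({1: ("A", None), 2: ("A", None), 3: ("A", "B"), 4: ("B", None)}, consult_day=3),
    # No consult: both attendings are credited with not ordering one.
    attribute_encounter({1: ("A", None), 2: ("C", "C")}),
    attribute_encounter({1: ("A", "A")}, consult_day=1),
]
print(consult_rates(encounters))
```

Because every physician who was primary attending before the consult is credited with the encounter, one palliative-appropriate patient can count toward several physicians’ rates, which is the many-to-one attribution described above.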

Display

Agathos periodically (roughly once every three to six months) sends each hospitalist a personalized text message about their palliative care utilization patterns, delivered directly to their personal mobile device. The message directs physicians to a web-enabled mobile application where they can review details of the insight, peer comparison graphs, historical trends, and relevant examples of patients for whom they ordered palliative care.

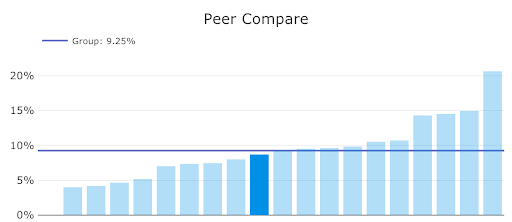

In the example above, a physician whose utilization is similar to the group’s mean is viewing the application. They can see not only their own score but also their peers’ scores, so they can compare their performance.

Discussion

Delivering information about palliative care utilization to physicians is an important tactic for improving the use of palliative care, and, in the time of COVID-19, an urgent one. As demand for palliative care increases, clinicians are increasingly charged with deciding how to use palliative care resources appropriately, even though education on best practices lags behind. Our approach aims to close this knowledge gap by empowering physicians through peer comparison, helping to reduce variation and increase the use of palliative care.

As in this case, clinical best practices can change over time due to several factors, including advances in medical knowledge, changes in population health, and economic factors that change the costs and benefits of a given decision. The effectiveness of quality improvement and variation reduction initiatives hinges on a data-driven and user-centric approach:

- pull data from clinical records

- calculate physician metrics with a convincing attribution methodology

- prioritize findings among all other possible insights

- deliver feedback in an engaging, persuasive, respectful way


Reference

- Elixhauser A, Steiner C, Harris DR, Coffey RM. Comorbidity measures for use with administrative data. Med Care 1998;36:8-27.

Natasha is a published academic and researcher and a Data Scientist at Agathos. She cut her teeth conducting research in behavioral ecology. She currently leverages her data expertise to identify patterns and insights in healthcare data for improved patient care.
